TSCC¶

TSCC houses our 640-core supercomputer as part of a condo resource-sharing system. Other researchers (mostly bioinformaticians from the Ideker, Ren, and Zhang labs) can use our portion for their jobs (with an 8-hour time limit), and in return we can use their portions when we need extra computing power. We lead the pack in terms of pure crunching power, with the Ideker lab in close second place with 512 cores. But they have 2x the RAM per core for jobs that require lots of memory, so our purchases are complementary and sharing is encouraged.

The main contacts for questions about TSCC are the dry lab and TSCC users mailing lists. The main contact for problems with TSCC is Jim Hayes.

Important rules¶

Warning

- All sequencing data is stored in the /projects/ps-yeolab/seqdata folder.

- The folder seqdata is intended as permanent storage, and no folders or files there should ever be deleted.

- Do not process data in seqdata. Use the directory structure described in Organize your home directory to create a scratch folder for all data processing.

First Steps¶

Your first login session should include some of the following commands, which will familiarize you with the cluster, teach you how to do some useful tasks on the queue, and help you set up a common directory structure shared by everyone in the lab.

Log on!¶

First, log in to TSCC!

ssh YOUR_TSCC_USERNAME@tscc-login2.sdsc.edu

TSCC has two login nodes, login1 and login2, for load balancing: if you just log on to tscc.sdsc.edu, it’ll choose whichever login node is less occupied. The command above logs on to login2 specifically, because then we’ll always have our screen session on the same node. You can use login1 instead if you like, to balance it out :)

Start a screen session¶

NOTE - You can skip this on your initial setup, but you should come back and do this later because it is cool.

Screen is an awesome tool which allows you to have multiple “tabs” open in

the same login session, and you can easily transition between screens. Plus,

they’re persistent, so you can leave something running in a screen session,

log out of TSCC, and it will still be running! Amazing! If you have

suggestions of things to add to this .screenrc, feel free to make a pull

request on Olga’s rcfiles github repo.

To get a nice status bar at the bottom of your terminal window, get this

.screenrc file:

cd

wget https://raw.githubusercontent.com/olgabot/rcfiles/master/.screenrc

Note

The control letter is j, not the default a described in the screen documentation, so for example to create a new window, do Ctrl-j c and to kill the current window, do Ctrl-j k. Do Ctrl-j j to switch between windows, and Ctrl-j #, where # is some window number, to switch to a numbered tab specifically (e.g. Ctrl-j 2 to switch to tab number 2).

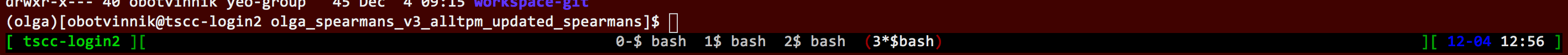

This .screenrc adds a status bar at the bottom of your screen, like this:

Now to start a screen session do:

screen

If you’re re-logging in and you have an old screen session, do this to “re-attach” the screen window.

screen -x

Every time you log in to TSCC, you’ll want to reattach the screens from

before, so the first step I always take when I log in to TSCC is exactly

that, screen -x.

Get gscripts access to software¶

- Before you’re able to clone the gscripts github repo, you’ll need to add your ssh keys on TSCC to your Github account. Follow Github’s instructions for generating SSH keys.

- First, clone the gscripts github repo to your home directory on TSCC (this assumes you’ve already created a github account).

# <on TSCC>

cd

git clone http://github.com/YeoLab/gscripts

- Add this line to the end of your ~/.bashrc file (using either emacs or vi/vim, your choice)

source ~/gscripts/bashrc/tscc_bash_settings_current

Note

Make sure to add source ~/gscripts/bashrc/tscc_bash_settings_current

to your ~/.bashrc file so that it always loads up the correct yeolab

environment variables!

- “source” the .bashrc file so you load all the convenient environment variables we’ve created.

source ~/.bashrc

Download and install anaconda¶

Download the Anaconda Python/R package manager using wget (web-get). The link below is from the Anaconda downloads page.

wget http://repo.continuum.io/archive/Anaconda2-4.1.1-Linux-x86_64.sh

To install Anaconda, run the shell script with bash (this will take some time). It will ask you a bunch of questions; use the defaults for all of them (press enter each time).

bash Anaconda2-4.1.1-Linux-x86_64.sh

To activate anaconda, source your .bashrc:

source ~/.bashrc

Make sure your Python is pointing to the Anaconda python with:

which python

The output should look something like:

~/anaconda2/bin/python

Make a virtual environment on TSCC¶

WARNING - this is easy to get messed up. While this is a nice tool, it is not absolutely necessary upon initial setup and might be best to wait and configure environments after you have a better understanding of how they work.

On TSCC, the easiest way to create a virtual environment (aka virtualenv)

is by making one off of the base environment, which already has a bunch of

modules that we use all the time (numpy, scipy, matplotlib, pandas, scikit-learn, ipython, the list goes on). Here’s how you do it:

Note

The variable $USER is meant to be typed literally, meaning you can copy the command below exactly, and TSCC will create an environment named after your username.

If you don’t believe me, compare the output of:

echo USER

to the output of:

echo $USER

The second one should output your TSCC username, because the $ dollar

sign indicates to the shell that you’re asking for the variable $USER,

not the literal word “USER”.

conda create --clone base --name $USER

Note

You can also create an environment from scratch using conda to install

all the Anaconda Python packages, and then using pip in the environment

to install the remaining packages, like so:

conda create --yes --name ENVIRONMENT_NAME pip numpy scipy cython matplotlib nose six scikit-learn ipython networkx pandas tornado statsmodels setuptools pytest pyzmq jinja2 pyyaml pymongo biopython markupsafe seaborn joblib semantic_version

source activate ENVIRONMENT_NAME

conda install --yes --channel https://conda.binstar.org/daler pybedtools

conda install --yes --channel https://conda.binstar.org/kyleabeauchamp fastcluster

pip install gspread brewer2mpl husl gffutils matplotlib-venn HTSeq misopy

pip install https://github.com/YeoLab/clipper/tarball/master

pip install https://github.com/YeoLab/gscripts/tarball/master

pip install https://github.com/YeoLab/flotilla/tarball/master

These commands are how the base environment was created.

Then activate your environment with

source activate $USER

You’ll probably stay in this environment all the time.

Warning

Make sure to add source activate $USER to your ~/.bashrc file!

Then you will always be in your environment

If you need to switch to another environment, then exit your environment with:

source deactivate

Note

Now that you’ve created your own environment, go to your gscripts folder and install your own personal gscripts, to make sure it’s the most updated version.

cd ~/gscripts

pip install . # The "." means install "this," as in "this folder where I am"

Add the location of GENOME to your ~/.bashrc¶

To run the analysis pipeline, you will need to specify where the genomes are

on TSCC, and you can do this by adding this line to your ~/.bashrc:

GENOME=/projects/ps-yeolab/genomes
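
If you'd rather not edit the file by hand, here is a minimal sketch of a helper that appends a line to a file only when it is not already there, so it is safe to run more than once. The function name add_line_once is made up for this example. The export keyword is also an assumption: it makes GENOME visible to programs started from your shell, which the pipeline may or may not need.

```shell
# add_line_once FILE LINE: append LINE to FILE unless FILE already has it.
# (Hypothetical helper, not part of gscripts.)
add_line_once() {
    # -x matches whole lines only, -F treats the text as a fixed string
    grep -qxF -- "$2" "$1" 2>/dev/null || echo "$2" >> "$1"
}

# On TSCC you could then run:
# add_line_once ~/.bashrc 'export GENOME=/projects/ps-yeolab/genomes'
```

Run source ~/.bashrc afterwards and check the result with echo $GENOME.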

Organize your home directory¶

Create an organized home directory structure following a common

template, so others can find your scripts, workflows,

and even final results/papers! Do not store actual data in your home

directory as it is limited to 100 GB only.

Link your scratch directory to your home¶

The “scratch” storage on TSCC is for temporary (after 90 days it gets

purged) storage. It’s very useful for storing intermediate files,

and outputs from compute jobs because the data there is stored on

solid-state drives (SSDs, currently 300TB) which have incredibly fast

read-write speeds, which is perfect for outputs from alignment algorithms.

It can be annoying to go back and forth between your scratch directory,

so it’s convenient to have a link to your scratch from home,

which you can make like this:

ln -s /oasis/tscc/scratch/$USER $HOME/scratch

Note

This is virtually unlimited temporary storage space, designed for heavy I/O. Aside from common reference files (e.g. Genomes, GENCODE, etc.) this should be the only space that you can read/write to from your scripts/workflows! The parallel throughput of this storage is 100 GB/s (e.g. 10 tasks can each read/write at 10 GB/s at the same time).

Warning

Anything saved here is subject to deletion without warning after 3 months

or less of storage. In particular, the large .sam and .bam files

can get deleted, even though their .done files (produced by the

GATK Queue RNA-seq pipeline as a placeholder) will still exist, and they

will seem done to the pipeline. To avoid lost data, here are a few steps:

- Keep your metadata and sample/cell counts in your $HOME/projects or /projects/ps-yeolab/$USER folder, which don’t get purged periodically.

- Delete *.done files when re-running a partially eroded pipeline run.

- Use this recursive touch command to “refresh” the decay clock on your files before important meetings and re-analysis steps:

cd important_scratch_dir

find . | xargs touch
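
One caveat with piping find into xargs is that file names containing spaces get split into pieces. As a sketch of a more forgiving variant (the function name refresh_tree is made up for this example), find can call touch itself:

```shell
# refresh_tree DIR: update the timestamps of everything under DIR.
# "-exec touch {} +" hands the names straight to touch, so names with
# spaces survive intact.
refresh_tree() {
    find "$1" -exec touch {} +
}

# Example, using the placeholder folder name from above:
# refresh_tree ~/scratch/important_scratch_dir
```

This does the same job as the two-line version; it only changes how the file names are passed along.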

Create workflow and projects folders¶

Create ~/workflows for your personal scripts (bash, makefile, queue, and so on) before you add them to gscripts, and ~/projects to organize your figures, notebooks, final results, and even manuscripts.

mkdir ~/workflows ~/projects

Here’s an example project directory structure:

$ ls -lha /home/gpratt/projects/fox2_iclip/

total 9.5K

drwxr-xr-x 2 gpratt yeo-group 5 Sep 16 2013 .

drwxr-xr-x 40 gpratt yeo-group 40 Nov 24 12:20 ..

lrwxrwxrwx 1 gpratt yeo-group 49 Aug 21 2013 analysis -> /home/gpratt/scratch/projects/fox2_iclip/analysis

lrwxrwxrwx 1 gpratt yeo-group 45 Aug 21 2013 data -> /home/gpratt/scratch/projects/fox2_iclip/data

lrwxrwxrwx 1 gpratt yeo-group 50 Aug 21 2013 scripts -> /home/gpratt/processing_scripts/fox2_iclip/scripts

Note

Notice that all of these are soft-links to either ~/scratch or some

other processing scripts.

Let us see your stuff¶

Make everything readable by other yeo lab members and restrict access from other users (per HIPAA/HITECH requirements)

chmod -R g+r ~/

chmod -R g+r ~/scratch/

chmod -R o-rwx ~/

chmod -R o-rwx ~/scratch/

But git will get mad at you if the private keys in your ~/.ssh folder are visible to others, so make them visible to only you via:

chmod -R go-rwx ~/.ssh/
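
To double-check the result, here is a small sketch that lists anything users outside the group can still touch. The function name list_world_accessible is made up for this example; symbolic links are skipped because their own permission bits are always wide open.

```shell
# list_world_accessible DIR: print every file or folder under DIR that users
# outside your group can still read (4), write (2), or execute/enter (1).
list_world_accessible() {
    find "$1" ! -type l \( -perm -004 -o -perm -002 -o -perm -001 \) -print
}

# Example: list_world_accessible ~/projects
```

If the chmod commands above worked, running this on your home directory should print nothing.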

In the end, your home directory should look something like this:

$ ls -l $HOME

lrwxrwxrwx 1 bkakarad yeo-group 29 Jun 24 2013 scratch -> /oasis/tscc/scratch/bkakarad/

drwxr-x---+ 2 bkakarad yeo-group 2 Jun 24 2013 gscripts

drwxr-x---+ 3 bkakarad yeo-group 3 Jun 24 2013 projects

drwxr-x---+ 2 bkakarad yeo-group 2 Jun 24 2013 workflows

IPython notebooks on TSCC¶

This has two sections: Setup and Running. They should be done in order :)

Setup IPython notebooks on TSCC¶

- First, on your personal computer, you will want to set up passwordless ssh from your laptop to TSCC. For reference, a@A is you from your laptop, and b@B is TSCC. So everywhere you see b@B, replace that with yourusername@tscc.sdsc.edu. For a@A, since your laptop likely doesn’t have a fixed IP address or a way to log in to it, you don’t need to worry about replacing it. Instead, use a@A as a reference point for whether you should be doing the command from your laptop (a@A) or TSCC (b@B).

- To set up IPython notebooks on TSCC, you will want to add some alias variables to your ~/.bash_profile (for Mac) or ~/.bashrc (for Linux):

IPYNB_PORT=[some number above 1024]

alias tscc='ssh obotvinnik@tscc-login2.sdsc.edu'

This way, I can just type tscc and log onto tscc-login2

specifically. It is important for IPython notebooks that you always log

on to the same node. You can use tscc-login1 instead, too,

this is just what I have set up. Just replace my login name

(“obotvinnik”) with yours.

- To activate all the commands you just added, on your laptop, type source ~/.bash_profile. (source is a command which will run all the lines in the file you gave it, i.e. here it will assign the variable IPYNB_PORT to the value you gave it, and run the alias command so you only have to type tscc to log in to TSCC)

- Next, type tscc and log on to the server.

- On TSCC, add these lines to your ~/.bashrc file.

IPYNB_PORT=same number as the above IPYNB_PORT from your laptop

alias ipynb="ipython notebook --no-browser --port $IPYNB_PORT &"

alias sshtscc="ssh -NR $IPYNB_PORT:localhost:$IPYNB_PORT tscc-login2 &"

- Notice that in sshtscc, I use the same login node that I logged in to, tscc-login2. The ampersands “&” at the end of the lines tell the computer to run these processes in the background, which is super useful.

- You’ll need to run source ~/.bashrc again on TSCC, so the $IPYNB_PORT variable and the ipynb and sshtscc aliases are available.

- Set up passwordless ssh between the compute nodes and TSCC with:

cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

Back on your home laptop, edit your ~/.bash_profile (on Macs) or ~/.bashrc (on other unix machines) to add the line:

alias tunneltscc="ssh -NL $IPYNB_PORT:localhost:$IPYNB_PORT obotvinnik@tscc-login2.sdsc.edu &"

Make sure to replace “obotvinnik” with your TSCC login :) It is also important that these are double-quotes and not single-quotes, because the double-quotes evaluate the $IPYNB_PORT to the number you chose, e.g. 4000, whereas the single-quotes will keep it as the letters $IPYNB_PORT.
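
If you want to see the quoting difference for yourself, here is a tiny sketch you can paste into any bash terminal. The port 4000 is just the example number from above, and the variable names double and single are made up for the demonstration.

```shell
IPYNB_PORT=4000   # example value; use the number you picked

double="ssh -NL $IPYNB_PORT:localhost:$IPYNB_PORT"   # variable is filled in now
single='ssh -NL $IPYNB_PORT:localhost:$IPYNB_PORT'   # kept as literal text

echo "$double"   # prints: ssh -NL 4000:localhost:4000
echo "$single"   # prints: ssh -NL $IPYNB_PORT:localhost:$IPYNB_PORT
```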

Run IPython Notebooks on TSCC¶

Now that you have everything configured, you can run IPython notebooks on TSCC! Here are the steps to follow.

- Log on to TSCC

- Now that you have those set up, start up a screen session, which allows you to have something running continuously, without being logged in.

screen -x

Note

If this gives you an error saying “There is no screen to be attached”

then you need to run plain old screen (no -x) first.

If this gives you an error saying you need to pick one session, make

life easier for yourself and pick one to kill all the windows in,

(using Ctrl-j K if you’re using the .screenrc that I recommended

earlier, otherwise the default is Ctrl-a K). Once you’ve killed all

screen sessions except for one, you can run screen -x with abandon,

and it will connect you to the only one you have open.

- In this screen session, now request an interactive job, e.g.:

qsub -I -l walltime=2:00:00 -q home-yeo -l nodes=1:ppn=2

- Wait for the job to start.

- Run your TSCC-specific aliases on the compute node:

ipynb

sshtscc

- Back on your laptop, now run your tunneling command:

tunneltscc

- Open up http://localhost:[YOUR IPYNB PORT] on your browser.

Installing and upgrading Python packages¶

To install Python packages first try conda install:

conda install <package name>

If there is no package in conda, then try bioconda (a google search for your package along with the keyword “bioconda” will tell you if this is available):

conda install -c bioconda <package name>

If there is no package in conda or bioconda, then (and ONLY then) try pip:

pip install <package name>

To upgrade packages, do:

(using conda)

conda update <package name>

(using pip)

pip install -U <package name>

NOTE - you can see if your package is correctly installed in your anaconda with:

which <package name>

Alternatively, you can open python on your command line with:

python

And then try to import the package you just installed. If it doesn’t throw an error, it installed successfully!

import <package name>

To get out of python on your command line:

quit()

Installing R packages (beta!)¶

You can also use conda to install R and R packages. This will allow you to access a jupyter notebook in R rather than python code which can be VERY helpful for some analysis software that runs in R.

conda install -c r r-essentials

After the install, load your jupyter notebooks and start a new notebook. You should see options available to choose between Python2 and R.

Submitting and managing compute jobs on TSCC¶

Submit jobs¶

To submit a script that you wrote, in this case called myscript.sh,

to TSCC, do:

qsub -q home-yeo -l nodes=1:ppn=2 -l walltime=0:30:00 myscript.sh
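
The same options can also live inside the script itself as #PBS comment lines, which TORQUE's qsub reads when you submit. Here is a minimal sketch of what such a myscript.sh could look like. The job name after -N and the echo line are placeholders made up for this example.

```shell
#!/bin/bash
#PBS -q home-yeo
#PBS -l nodes=1:ppn=2
#PBS -l walltime=0:30:00
#PBS -N myscript

# TORQUE sets PBS_O_WORKDIR to the folder you ran qsub from. Fall back to the
# current folder so the script can also be tried outside the queue.
workdir="${PBS_O_WORKDIR:-$PWD}"
cd "$workdir" || exit 1

echo "Running on $(uname -n) in $(pwd)"
```

With the options embedded like this, the submission shortens to qsub myscript.sh.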

Submit interactive jobs¶

To submit interactive jobs, do:

qsub -I -q home-yeo -l nodes=1:ppn=2 -l walltime=0:30:00

Submit jobs to home-scrm¶

To submit to the home-scrm queue, add -W group_list=scrm-group to

your qsub command:

qsub -I -l walltime=0:30:00 -q home-scrm -W group_list=scrm-group

Submitting many jobs at once¶

If you have a bunch of commands you want to run at once,

you can use this script to submit them all at once. In the next example,

commands.sh is a file that has the commands you want, each on its own line,

i.e. one command per line.

java -Xms512m -Xmx512m -jar /home/yeo-lab/software/gatk/dist/Queue.jar \

-S ~/gscripts/qscripts/do_stuff.scala --input commands.sh -run -qsub \

-jobQueue <queue> -jobLimit <n> --ncores <n> --jobname <name> -startFromScratch

This runs a scala job that submits sub-jobs to the PBS queue under the name you fill in where <name> now sits as a placeholder.
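
A commands.sh file like the one described above does not have to be typed by hand. As a rough sketch, a loop can write one command per line. The sample names and the gzip command here are hypothetical placeholders; swap in whatever you actually want to run.

```shell
# Write one command per line into commands.sh in the current folder.
: > commands.sh                      # start with an empty file
for sample in sample_01 sample_02 sample_03; do
    echo "gzip ${sample}.fastq" >> commands.sh
done

wc -l < commands.sh                  # how many commands will be submitted
```

It's worth opening commands.sh and reading it over once before handing it to the queue.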

Check job status, aka “why is my job stuck?”¶

Check the status of your jobs:

qme

Note

This will only work if you have followed the instructions and have source’d ~/gscripts/bashrc/tscc_bash_settings_current :)

qme outputs,

(olga)[obotvinnik@tscc-login2 ~]$ qme

tscc-mgr.sdsc.edu:

Req'd Req'd Elap

Job ID Username Queue Jobname SessID NDS TSK Memory Time S Time

----------------------- ----------- -------- ---------------- ------ ----- ------ ------ --------- - ---------

2006527.tscc-mgr.local obotvinnik home-yeo STDIN 35367 1 16 -- 04:00:00 R 02:35:36

2007542.tscc-mgr.local obotvinnik home-yeo STDIN 6168 1 1 -- 08:00:00 R 00:28:08

2007621.tscc-mgr.local obotvinnik home-yeo STDIN -- 1 16 -- 04:00:00 Q --

Check job status of array jobs¶

To check the status of your array jobs, you need to specify -t to see the status of the individual array pieces.

qstat -t

Kill an array job¶

If the job is an array job, you’ll need to add brackets, like this:

qdel 2006527[]

Which queue do I submit to? (check status of queues)¶

Check the status of the queue (so you know which queues to NOT submit to!)

qstat -q

Example output is,

(olga)[obotvinnik@tscc-login2 ~]$ qstat -q

server: tscc-mgr.local

Queue Memory CPU Time Walltime Node Run Que Lm State

---------------- ------ -------- -------- ---- --- --- -- -----

home-dkeres -- -- -- -- 2 0 -- E R

home-komunjer -- -- -- -- 0 0 -- E R

home-ong -- -- -- -- 2 0 -- E R

home-tg -- -- -- -- 0 0 -- E R

home-yeo -- -- -- -- 3 1 -- E R

home-visres -- -- -- -- 0 0 -- E R

home-mccammon -- -- -- -- 15 29 -- E R

home-scrm -- -- -- -- 1 0 -- E R

hotel -- -- 168:00:0 -- 232 26 -- E R

home-k4zhang -- -- -- -- 0 0 -- E R

home-kkey -- -- -- -- 0 0 -- E R

home-kyang -- -- -- -- 2 1 -- E R

home-jsebat -- -- -- -- 1 0 -- E R

pdafm -- -- 72:00:00 -- 1 0 -- E R

condo -- -- 08:00:00 -- 18 6 -- E R

gpu-hotel -- -- 336:00:0 -- 0 0 -- E R

glean -- -- -- -- 24 75 -- E R

gpu-condo -- -- 08:00:00 -- 16 36 -- E R

home-fpaesani -- -- -- -- 4 2 -- E R

home-builder -- -- -- -- 0 0 -- E R

home -- -- -- -- 0 0 -- E R

home-mgilson -- -- -- -- 0 4 -- E R

home-eallen -- -- -- -- 0 0 -- E R

----- -----

321 180

So right now is not a good time to submit to the hotel queue,

since it has a bunch of both running and queued jobs!
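
Scanning that table by eye gets old quickly. Here is a sketch of a small filter that sorts the queues by how many jobs are waiting. The function name busiest_queues is made up for this example, and it assumes the column layout shown above, where Run and Que are the 6th and 7th whitespace-separated fields and the state is "E R".

```shell
# busiest_queues: read `qstat -q` output on stdin and print
# "queued running name" for each queue, busiest first.
busiest_queues() {
    awk 'NF == 10 && $9 == "E" { print $7, $6, $1 }' | sort -rn
}

# On TSCC: qstat -q | busiest_queues | head -n 3
# Here it is fed three rows copied from the example output above:
busiest_queues <<'EOF'
home-yeo -- -- -- -- 3 1 -- E R
hotel -- -- 168:00:0 -- 232 26 -- E R
condo -- -- 08:00:00 -- 18 6 -- E R
EOF
# prints:
# 26 232 hotel
# 6 18 condo
# 1 3 home-yeo
```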

Show available “Service Units”¶

List the available Service Units (1 SU = 1 core*hour) ... for a quick ego boost. Also note that our supercomputer is separated in two, yeo-group and scrm-group, but the total balance is 5.29 million SU, just enough to secure us the top honors :-)

gbalance | sort -nrk 3 | head

Id Name Amount Reserved Balance CreditLimit Available

-- -------------------- ------- -------- ------- ----------- ---------

19 tideker-group 5211035 27922 5183113 0 5183113

82 yeo-group 3262925 0 3262925 0 3262925

81 scrm-group 2039328 0 2039328 0 2039328

14 mgilson-group 663095 208000 455095 0 455095

73 nanosprings-ucm 650000 0 650000 0 650000

17 kkey-group 635056 7104 627952 0 627952

16 k4zhang-group 534430 0 534430 0 534430

List the available TORQUE queues, for a quick boost in motivation!

qstat -q

Queue Memory CPU Time Walltime Node Run Que Lm State

---------------- ------ -------- -------- ---- --- --- -- -----

home-tideker -- -- -- 16 1 0 -- E R

home-visres -- -- -- 1 0 0 -- E R

hotel -- -- 72:00:00 -- 25 18 -- E R

home-k4zhang -- -- -- 4 21 0 -- E R

home-kkey -- -- -- 5 0 0 -- E R

pdafm -- -- 72:00:00 -- 0 0 -- E R

condo -- -- 08:00:00 -- 0 0 -- E R

glean -- -- -- -- 0 0 -- E R

home-builder -- -- -- 8 0 0 -- E R

home -- -- -- -- 0 0 -- E R

home-ewyeo -- -- -- 15 0 0 -- E R

home-mgilson -- -- -- 8 0 0 -- E R

----- -----

47 18

Show specs of all nodes¶

pbsnodes -a

Uploading Data to Amazon S3 buckets¶

- Create an AWS IAM user

Sign into AWS Console https://console.aws.amazon.com/ and click on Identity & Access Management (IAM)

- On the left, click Users

- At the top, click Create New Users

- Create a username and generate an access key

- Click Create in the lower right.

- On the next page, click Download Credentials in the lower right to download the access key for your new user

- Safeguard the downloaded file. It contains a key which is like a password for the AWS account.

- Click Close in the lower right.

- While still in the IAM Users page, click on the newly-created user’s name.

- Click the Permissions tab

- Under Managed Policies, click Attach Policy

- Type “S3” in the Filter field and check the AmazonS3FullAccess policy.

- Click Attach Policy in the lower right.

- Now your username is set up to use AWS S3 services.

- Download and install the AWS Command Line Interface (CLI)

- Download from https://aws.amazon.com/cli/

- Open a terminal. Run:

aws configure

- Fill in the AWS Access Key ID and AWS Secret Access Key when prompted using the information downloaded in the credentials file above.

- Pick an appropriate region name: http://docs.aws.amazon.com/general/latest/gr/rande.html

- I used the default output format by not entering any information when prompted at that step.

- Create a new Bucket

- Log in to your AWS account. Click on Services -> S3

- In the upper left hand corner, click “Create Bucket”

- Name your bucket as you please!

- From the command line, upload your data to the newly created bucket.

- You can find information about the available commands here: http://docs.aws.amazon.com/cli/latest/userguide/using-s3-commands.html

- This is a sample of the command I used to upload my data:

aws s3 sync --acl bucket-owner-full-control --sse AES256 /home/ecwheele/scratch/mds_splicing_v4/final_bams/ s3://mds-splicing-v4-bams

Random notes¶

Software goes in /projects/ps-yeolab/software

Make sure to recursively set group read/write permissions to the software directory so others can use and update the common software, using:

chmod -R ug+rw /projects/ps-yeolab/software

If you’re installing something from source and using ./configure and make and all that, then always set the flag --prefix=/projects/ps-yeolab/software when you run ./configure:

./configure --prefix=/projects/ps-yeolab/software

When possible install bins to /projects/ps-yeolab/software/bin

Running RNA-seq, CLIP-Seq, Ribo-Seq, etc qscripts GATK Queue pipelines¶

We use the Broad Institute’s Genome Analysis Toolkit (GATK) Queue software to run our pipelines. This software solves a lot of problems for us, such as dealing with multiple-stage pipelines that have cross-dependencies (e.g. you can’t calculate splicing until you’ve mapped the reads, and you can’t map the reads until after you’ve removed adapters and repetitive genomic regions from them), and properly scheduling jobs so that one person’s analysis doesn’t completely take over the compute cluster.

Gabe has created a bunch of helpful template scripts for GATK Queue in his

folder /home/gpratt/templates:

$ ls -lh /home/gpratt/templates

total 26K

-rwxr-xr-x 1 gpratt yeo-group 660 May 7 2014 bacode_split.sh

-rwxr-xr-x 1 gpratt yeo-group 554 May 7 2014 bacode_split.sh~

-rwxr-xr-x 1 gpratt yeo-group 524 Sep 18 00:08 #clipseq.sh#

-rwxr-xr-x 1 gpratt yeo-group 524 Jul 12 2014 clipseq.sh

-rwxr-xr-x 1 gpratt yeo-group 516 Mar 26 2014 clipseq.sh~

-rwxr-xr-x 1 gpratt yeo-group 473 Aug 21 18:47 riboseq.sh

-rwxr-xr-x 1 gpratt yeo-group 528 Aug 21 18:46 riboseq.sh~

-rwxr-xr-x 1 gpratt yeo-group 530 Sep 5 17:29 rnaseq.sh

-rwxr-xr-x 1 gpratt yeo-group 527 Mar 26 2014 rnaseq.sh~

Each Queue job requires a manifest file with a list of all files to process, and the genome to process them on.

Warning

All further instructions depend on you having followed the directions in Create workflow and projects folders, where for this particular project, you’ve created these folders:

~/projects/PROJECT_NAME

~/processing_scripts/PROJECT_NAME/scripts

~/scratch/PROJECT_NAME/data

~/scratch/PROJECT_NAME/analysis

And that you’ve linked the scratch and home directories correctly. For

example, here’s how you can create the project directory structure for a

project called singlecell_pnms:

NAME=singlecell_pnms

mkdir -p ~/projects/$NAME ~/scratch/$NAME ~/scratch/$NAME/data ~/scratch/$NAME/analysis ~/processing_scripts/$NAME/scripts

ln -s ~/scratch/$NAME/data ~/projects/$NAME/data

ln -s ~/scratch/$NAME/analysis ~/projects/$NAME/analysis

ln -s ~/processing_scripts/$NAME/scripts ~/projects/$NAME/scripts

Here’s an example queue script for single-end, not strand-specific RNA-seq,

from the file singlecell_pnms_se_v3.sh:

#!/bin/bash

NAME=singlecell_pnms_se

VERSION=v3

DIR=singlecell_pnms

java -Xms512m -Xmx512m -jar /projects/ps-yeolab/software/gatk/dist/Queue.jar -S $HOME/gscripts/qscripts/analyze_rna_seq.scala --input ${NAME}_${VERSION}.txt --adapter TCGTATGCCGTCTTCTGCTTG --adapter ATCTCGTATGCCGTCTTCTGCTTG --adapter CGACAGGTTCAGAGTTCTACAGTCCGACGATC --adapter GATCGGAAGAGCACACGTCTGAACTCCAGTCAC -qsub -jobQueue home-yeo -runDir ~/projects/${DIR}/analysis/${NAME}_${VERSION} -log ${NAME}_${VERSION}.log --location ${NAME} --strict -keepIntermediates --not_stranded -single_end -run

Notice that the “--input” is the file ${NAME}_${VERSION}.txt, which

translates to singlecell_pnms_se_v3.txt in this case, since

NAME=singlecell_pnms_se and VERSION=v3 are defined at the beginning of

the file. This file is the “manifest” of the sequencing run. In the case of

single-end reads, this is a file where each line has,

/path/to/read1.fastq.gz\tspecies\n,

where \t indicates a tab (using the <TAB> character), and \n

indicates a new line, created by <ENTER>. Here are the first

10 lines of singlecell_pnms_se_v3.txt (obtained via

head singlecell_pnms_se_v3.txt):

/home/obotvinnik/projects/singlecell_pnms/data/CVN_01_R1.fastq.gz hg19

/home/obotvinnik/projects/singlecell_pnms/data/CVN_02_R1.fastq.gz hg19

/home/obotvinnik/projects/singlecell_pnms/data/CVN_03_R1.fastq.gz hg19

/home/obotvinnik/projects/singlecell_pnms/data/CVN_04_R1.fastq.gz hg19

/home/obotvinnik/projects/singlecell_pnms/data/CVN_05_R1.fastq.gz hg19

/home/obotvinnik/projects/singlecell_pnms/data/CVN_06_R1.fastq.gz hg19

/home/obotvinnik/projects/singlecell_pnms/data/CVN_07_R1.fastq.gz hg19

/home/obotvinnik/projects/singlecell_pnms/data/CVN_08_R1.fastq.gz hg19

/home/obotvinnik/projects/singlecell_pnms/data/CVN_09_R1.fastq.gz hg19

/home/obotvinnik/projects/singlecell_pnms/data/CVN_10_R1.fastq.gz hg19
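
A manifest in this format can be generated rather than typed. Here is a minimal sketch, assuming your single-end files all end in _R1.fastq.gz and share one species. The function name make_se_manifest is made up for this example and is not part of gscripts.

```shell
# make_se_manifest DATA_DIR SPECIES: print one "path<TAB>species" line for
# every *_R1.fastq.gz file in DATA_DIR.
make_se_manifest() {
    for fastq in "$1"/*_R1.fastq.gz; do
        [ -e "$fastq" ] || continue          # skip if the glob matched nothing
        printf '%s\t%s\n' "$fastq" "$2"
    done
}

# Example, using the names from this section:
# make_se_manifest ~/projects/singlecell_pnms/data hg19 > singlecell_pnms_se_v3.txt
```

If the folder also holds paired-end files, their R1 halves will match the pattern too, so check the output with head before using it.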

For paired-end, not strand-specific RNA-seq, here’s the script of the file

singlecell_pnms_pe_v3.sh

#!/bin/bash

NAME=singlecell_pnms_pe

VERSION=v3

DIR=singlecell_pnms

java -Xms512m -Xmx512m -jar /projects/ps-yeolab/software/gatk/dist/Queue.jar -S $HOME/gscripts/qscripts/analyze_rna_seq.scala --input ${NAME}_${VERSION}.txt --adapter TCGTATGCCGTCTTCTGCTTG --adapter ATCTCGTATGCCGTCTTCTGCTTG --adapter CGACAGGTTCAGAGTTCTACAGTCCGACGATC --adapter GATCGGAAGAGCACACGTCTGAACTCCAGTCAC -qsub -jobQueue home-yeo -runDir ~/projects/${DIR}/analysis/${NAME}_${VERSION} -log ${NAME}_${VERSION}.log --location ${NAME} --strict -keepIntermediates --not_stranded -run

Notice that the “--input” is the file ${NAME}_${VERSION}.txt, which

translates to singlecell_pnms_pe_v3.txt in this case, since

NAME=singlecell_pnms_pe and VERSION=v3 are defined at the beginning of

the file. This file is the “manifest” of the sequencing run. In the case of

paired-end reads, this is a file where each line has:

read1.fastq.gz;read2.fastq.gz\tspecies\n,

where \t indicates a tab (using the <TAB> character), and \n

indicates a new line, created by <ENTER>. Here are the first

10 lines of singlecell_pnms_pe_v3.txt (obtained via

head singlecell_pnms_pe_v3.txt):

/home/obotvinnik/projects/singlecell_pnms/data/M1_01_R1.fastq.gz;/home/obotvinnik/projects/singlecell_pnms/data/M1_01_R2.fastq.gz hg19

/home/obotvinnik/projects/singlecell_pnms/data/M1_02_R1.fastq.gz;/home/obotvinnik/projects/singlecell_pnms/data/M1_02_R2.fastq.gz hg19

/home/obotvinnik/projects/singlecell_pnms/data/M1_03_R1.fastq.gz;/home/obotvinnik/projects/singlecell_pnms/data/M1_03_R2.fastq.gz hg19

/home/obotvinnik/projects/singlecell_pnms/data/M1_04_R1.fastq.gz;/home/obotvinnik/projects/singlecell_pnms/data/M1_04_R2.fastq.gz hg19

/home/obotvinnik/projects/singlecell_pnms/data/M1_05_R1.fastq.gz;/home/obotvinnik/projects/singlecell_pnms/data/M1_05_R2.fastq.gz hg19

/home/obotvinnik/projects/singlecell_pnms/data/M1_06_R1.fastq.gz;/home/obotvinnik/projects/singlecell_pnms/data/M1_06_R2.fastq.gz hg19

/home/obotvinnik/projects/singlecell_pnms/data/M1_07_R1.fastq.gz;/home/obotvinnik/projects/singlecell_pnms/data/M1_07_R2.fastq.gz hg19

/home/obotvinnik/projects/singlecell_pnms/data/M1_08_R1.fastq.gz;/home/obotvinnik/projects/singlecell_pnms/data/M1_08_R2.fastq.gz hg19

/home/obotvinnik/projects/singlecell_pnms/data/M1_09_R1.fastq.gz;/home/obotvinnik/projects/singlecell_pnms/data/M1_09_R2.fastq.gz hg19

/home/obotvinnik/projects/singlecell_pnms/data/M1_10_R1.fastq.gz;/home/obotvinnik/projects/singlecell_pnms/data/M1_10_R2.fastq.gz hg19
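
The paired-end manifest can be sketched the same way: find each R1 file, work out the name of its R2 mate, and write both separated by a semicolon. This assumes mates differ only in the _R1/_R2 part of the file name, as in the listing above. The function name make_pe_manifest is made up for this example.

```shell
# make_pe_manifest DATA_DIR SPECIES: print "read1;read2<TAB>species" for every
# *_R1.fastq.gz file in DATA_DIR that has a matching *_R2.fastq.gz mate.
make_pe_manifest() {
    for r1 in "$1"/*_R1.fastq.gz; do
        r2="${r1%_R1.fastq.gz}_R2.fastq.gz"  # swap the R1 suffix for R2
        if [ -e "$r1" ] && [ -e "$r2" ]; then
            printf '%s;%s\t%s\n' "$r1" "$r2" "$2"
        fi
    done
}

# Example, using the names from this section:
# make_pe_manifest ~/projects/singlecell_pnms/data hg19 > singlecell_pnms_pe_v3.txt
```

Files without a mate are skipped, so single-end samples sitting in the same folder do not end up in the paired-end manifest.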

For this project, I had a mix of both paired-end and single-end reads, so

that’s why DIR is the same for both the singlecell_pnms_se_v3.sh and

singlecell_pnms_pe_v3.sh scripts, but NAME was different - then they’re

saved in different folders.

Running GATK Queue pipeline scripts¶

Now that you’ve created a manifest file called ${NAME}_${VERSION}.txt and a script called ${NAME}_${VERSION}.sh, you are almost ready to run the pipeline.
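
Before starting a long run, it can save time to check that every file named in the manifest actually exists. Here is a sketch of such a check. The function name check_manifest is made up for this example, and it only assumes the manifest format described above (paths, a tab, then the species, with paired-end paths joined by a semicolon).

```shell
# check_manifest FILE: report lines that do not have exactly two tab-separated
# columns, and any fastq path that does not exist. Returns 1 if it found
# a problem, 0 otherwise.
check_manifest() {
    bad=0
    tab=$(printf '\t')
    while IFS="$tab" read -r reads species extra; do
        if [ -z "$species" ] || [ -n "$extra" ]; then
            echo "expected 2 tab-separated columns: $reads"
            bad=1
            continue
        fi
        # Paired-end entries hold two paths separated by ";".
        old_ifs=$IFS
        IFS=';'
        for path in $reads; do
            [ -e "$path" ] || { echo "missing file: $path"; bad=1; }
        done
        IFS=$old_ifs
    done < "$1"
    return "$bad"
}

# Example: check_manifest singlecell_pnms_se_v3.txt && echo "manifest looks OK"
```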

Note

You should be using screen quite often now. You’ll want to run your

pipeline in a screen session, because then even when you log out of

TSCC, the pipeline will still be running.

If you’ve already run screen, reattach the session with:

screen -x

If that gives you the error: There is no screen to be attached., then

you haven’t run screen before, and you can start a session with:

screen

These scripts take quite a bit of memory to compile, so to be nice to everyone, log into a compute node by requesting an interactive job on TSCC. Also, your script may just run out of memory and fail if you’re not on a compute node, so that is even more incentive to log into a compute node!

This command will create an interactive job for 40 hours, on the home-scrm

queue, and with 1 node and 1 processor (you don’t need more than that for the

script, and the script will submit jobs that request more nodes/processors for

compute-intensive stuff like STAR or Sailfish). If you have a lot of samples,

you may need more time, but try just 40 hours first.

So here’s what you do:

qsub -I -l walltime=40:00:00 -q home-scrm

# Wait for the job to be ready. This may take a while

cd ~/projects/$NAME/scripts

sh ${NAME}_${VERSION}.sh

For example, for the singlecell_pnms project from before, I would do:

qsub -I -l walltime=40:00:00 -q home-scrm

# Waited for job to get scheduled/be ready ....

cd ~/projects/singlecell_pnms/scripts

sh singlecell_pnms_se_v3.sh

This outputs:

INFO 12:24:42,840 QScriptManager - Compiling 1 QScript

INFO 12:24:55,100 QScriptManager - Compilation complete

INFO 12:24:55,359 HelpFormatter - ----------------------------------------------------------------------

INFO 12:24:55,359 HelpFormatter - Queue v2.3-1095-gdb26a3f, Compiled 2015/01/26 15:22:32

INFO 12:24:55,359 HelpFormatter - Copyright (c) 2012 The Broad Institute

INFO 12:24:55,359 HelpFormatter - For support and documentation go to http://www.broadinstitute.org/gatk

INFO 12:24:55,360 HelpFormatter - Program Args: -S /home/obotvinnik/gscripts/qscripts/analyze_rna_seq.scala --input singlecell_pnms_se_v3.txt --adapter TCGTATGCCGTCTTCTGCTTG --adapter ATCTCGTATGCCGTCTTCTGCTTG --adapter CGACAGGTTCAGAGTTCTACAGTCCGACGATC --adapter GATCGGAAGAGCACACGTCTGAACTCCAGTCAC -qsub -jobQueue home-yeo -runDir /home/obotvinnik/projects/singlecell_pnms/analysis/singlecell_pnms_se_v3 -log singlecell_pnms_se_v3.log --location singlecell_pnms_se --strict -keepIntermediates --not_stranded -single_end -run

INFO 12:24:55,360 HelpFormatter - Date/Time: 2015/01/27 12:24:55

INFO 12:24:55,360 HelpFormatter - ----------------------------------------------------------------------

INFO 12:24:55,361 HelpFormatter - ----------------------------------------------------------------------

INFO 12:24:55,370 QCommandLine - Scripting AnalyzeRNASeq

INFO 12:24:58,436 QCommandLine - Added 773 functions

INFO 12:24:58,438 QGraph - Generating graph.

INFO 12:24:58,664 QGraph - Running jobs.

... more output ...

Pipeline frequently asked questions (FAQ)¶

How do I ...¶

- ... deal with multiple species? Do I have to create different manifest files?

- Fortunately, no! You can create a single manifest file.

Looking at /home/gpratt/projects/msi2/scripts, we see the file

msi2_v2.txt, which has the contents:

/home/gpratt/projects/msi2/data/msi2/MSI2_ACAGTG_ACAGTG_L008_R1.fastq.gz hg19

/home/gpratt/projects/msi2/data/msi2/MSI2_CAGATC_CAGATC_L008_R1.fastq.gz mm9

/home/gpratt/projects/msi2/data/msi2/MSI2_GCCAAT_GCCAAT_L008_R1.fastq.gz mm9

/home/gpratt/projects/msi2/data/msi2/MSI2_TAGCTT_TAGCTT_L008_R1.fastq.gz hg19

/home/gpratt/projects/msi2/data/msi2/MSI2_TGACCA_TGACCA_L008_R1.fastq.gz hg19

/home/gpratt/projects/msi2/data/msi2/MSI2_TTAGGC_TTAGGC_L008_R1.fastq.gz hg19

So you can reference multiple genomes in a single manifest file!

- ... deal with both single-end and paired-end reads in one project? Do I need to create separate manifest files?

- Yes, unfortunately. :( Check out the singlecell_pnms project above as an example.

- ... see the documentation for a queue script?

- This command will show documentation for analyze_rna_seq.scala. For further documentation, see the GATK Queue website.

java -Xms512m -Xmx512m -jar /projects/ps-yeolab/software/gatk/dist/Queue.jar -S ~/gscripts/qscripts/analyze_rna_seq.scala

analyze_rna_seq¶

The queue script analyze_rna_seq.scala runs or generates:

- RNA-SeQC

- cutadapt

- miso

- OldSplice

- Sailfish

- A->I editing predictions

- bigWig files

- Counts of reads mapping to repetitive elements

- Estimates of PCR Duplication

Detailed description of analyze_rna_seq.scala outputs.